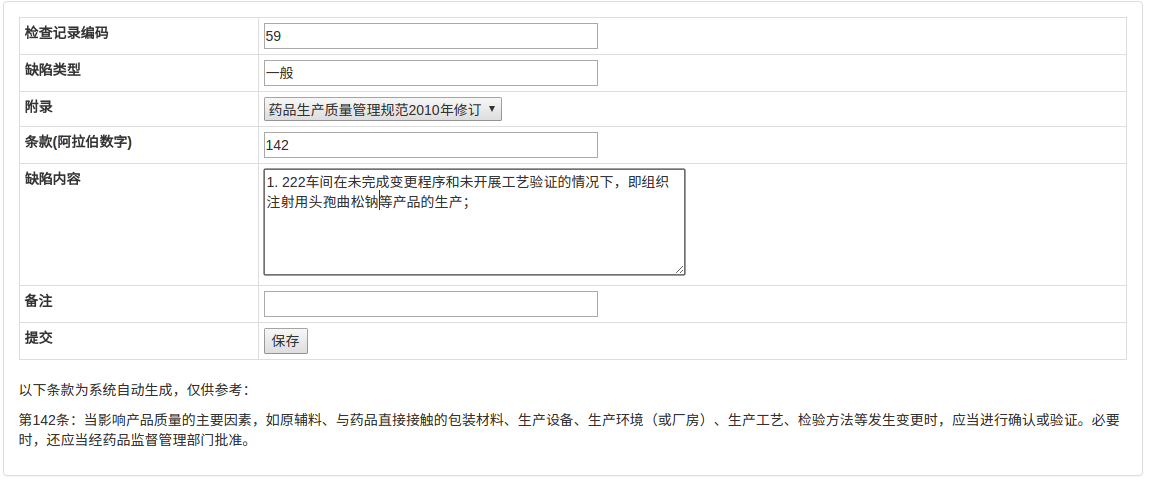


import sys
import os
import re
import argparse

import numpy as np
import jieba
import joblib  # sklearn.externals.joblib was removed in scikit-learn 0.23
import psycopg2
from sklearn.naive_bayes import MultinomialNB
from sklearn.neighbors import KNeighborsClassifier

# Data and model files used by this script
VOCABLIST_FILE = 'vocablist.npy'
TRAINMAT_FILE = 'trainmat.npy'
CLASSVEC_FILE = 'classvec.npy'
CLF_FIT_FILE = 'clf_fit.pkl'

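def seg_sentence(sentence):
    """Strip Latin letters, digits and punctuation, then segment with jieba."""
    # Punctuation, common Chinese function words and numerals to drop after segmentation
    chinese_punctuation = (',', '“', '”', ':', ';', '。', '(', ')', '《', '》', ',', '.', ';', ' ', '、',
                           '等', '的', '在', '与', '之', '上', '将', '中',
                           '一', '二', '三', '四', '五', '六', '七', '八', '九', '十',
                           '为', '了', '——', '—', '-')
    # Raw string avoids invalid-escape warnings; the character set is unchanged
    s = re.sub(r"[A-Za-z0-9\[\]`~!@#$^&*()=|{}':;,.<>/?\\%]", "", sentence.strip())
    seg_list = jieba.cut(s, cut_all=False)
    # Drop tokens that are purely numeric (ignoring '.' and '-')
    seg_list = [x for x in seg_list if not x.replace('.', '').replace('-', '').isdigit()]
    seg_list = [x for x in seg_list if x not in chinese_punctuation]
    return seg_list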

def setOfWords2Vec(vocabList, inputSet):
    returnVec = [0] * len(vocabList)
    for word in inputSet:
        if word in vocabList:
            returnVec[vocabList.index(word)] = 1
    return returnVec

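def make_txt_out(result, output):
    """Look up the predicted clause in the database and write it to `output`."""
    # Split the predicted label into appendix (thousands) and clause number (remainder)
    appendix = int(result // 1000)
    clause = int(result % 1000)
    rows = []
    conn = None
    try:
        # Connection values are placeholders
        conn = psycopg2.connect(database="dbname", user="username", password="passwd", port="port")
        cursor = conn.cursor()
        # Parameterized query instead of string formatting
        cursor.execute(
            "select appendix_name,snumber,content from gmpclause where appendix=%s and snumber=%s",
            (appendix, clause))
        rows = cursor.fetchall()
    except Exception as e:
        print(e)
    finally:
        if conn is not None:
            conn.close()
    if rows:
        appendix_name, snumber, content = rows[0][0], rows[0][1], rows[0][2]
        result_str = ''
        if appendix_name != 'GMP':
            # '附录' means "Appendix"
            result_str += '附录:' + appendix_name + ', '
        # '第N条' means "Article N"
        result_str += '第{0}条:{1}'.format(snumber, content)
        with open(output, 'w', encoding='utf-8') as fh:
            fh.write(result_str)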

if __name__ == "__main__":
    parser = argparse.ArgumentParser()
    parser.add_argument('-o', '--output', help='output file for the result')
    parser.add_argument('-d', '--defect', help='defect description')
    args = parser.parse_args()
    output = args.output
    sentence = args.defect

    # Vectorize the defect description against the saved vocabulary
    vocab_list = np.load(VOCABLIST_FILE)
    vocab_list = vocab_list.tolist()
    defect_vec = np.array(setOfWords2Vec(vocab_list, seg_sentence(sentence)))
    defect_vec = defect_vec.reshape(1, defect_vec.shape[0])

    # Load the fitted classifier and predict the clause label
    clf = joblib.load(CLF_FIT_FILE)
    clf_result = clf.predict(defect_vec)
    result = clf_result[0]
    make_txt_out(result, output)

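`setOfWords2Vec` calls `vocabList.index(word)` for every token, which rescans the list each time. For a large vocabulary, one possible alternative is to build a word-to-position dict once and look tokens up in it. The sketch below is only an illustration under that assumption: `build_index` and `set_of_words_to_vec` are hypothetical names that do not appear in the script, and the sample vocabulary and tokens are made up.

```python
def build_index(vocab_list):
    """Map each vocabulary word to its first position in vocab_list."""
    index = {}
    for i, word in enumerate(vocab_list):
        index.setdefault(word, i)  # keep the first position, like list.index
    return index


def set_of_words_to_vec(index, vocab_size, tokens):
    """Return a 0/1 vector marking which vocabulary words occur in tokens."""
    vec = [0] * vocab_size
    for word in tokens:
        pos = index.get(word)
        if pos is not None:  # words outside the vocabulary are ignored
            vec[pos] = 1
    return vec


# Illustrative vocabulary and tokens (made up for this example)
vocab = ['洁净区', '记录', '设备', '校准']
index = build_index(vocab)
vec = set_of_words_to_vec(index, len(vocab), ['设备', '未', '校准'])
print(vec)
```

The result is the same vector `setOfWords2Vec` would produce for the same inputs. The dict only changes how positions are found, so the saved vocabulary file and classifier would not need to change.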