
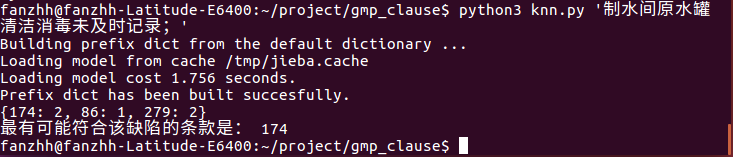

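import sys
import os
import re
import csv
import operator

import numpy as np
import jieba

# Cache files for the vocabulary list, the training matrix and the class vector.
VOCABLIST_FILE = 'vocablist.npy'
TRAINMAT_FILE = 'trainmat.npy'
CLASSVEC_FILE = 'classvec.npy'
# CSV file holding the labeled defect samples.
SAMPLE_FILE = './defect_sample_1610010806.csv'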

def seg_sentence(sentence):
    """
    Word segmentation. Uses jieba, https://github.com/fxsjy/jieba .
    """
    # Punctuation marks and common stop words to drop after segmentation.
    chinese_punctuation = (',','“','”',':',';','。','(',')','《','》',',','.',';',' ','、','等','的','在','与','之','上','将','中','一','二','三','四','五','六','七','八','九','十','为','了','——','—','-')
    # Strip ASCII letters, digits and punctuation before segmenting.
    s = re.sub(r"[A-Za-z0-9\[\]`~!@#$^&*()=|{}':;,.<>/?\\%]", "", sentence.strip())
    seg_list = jieba.cut(s, cut_all=False)
    # Drop purely numeric tokens (ignoring '.' and '-').
    seg_list = [x for x in seg_list if not x.replace('.', '').replace('-', '').isdigit()]
    seg_list = [x for x in seg_list if x not in chinese_punctuation]
    return seg_list

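def loadDataSet():
    """
    Load the data and build the samples. Returns the list of defects
    and the class of each one.
    """
    defect_list = []
    classVec = []
    # Pass encoding=... to open() if the CSV is not in the platform default encoding.
    with open(SAMPLE_FILE, newline='') as csvfile:
        reader = csv.reader(csvfile)
        for row in reader:
            # Column 1 holds the defect text, column 2 its integer class.
            seg_list = seg_sentence(row[1])
            defect_list.append(seg_list)
            classVec.append(int(row[2]))
    return defect_list, classVec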

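def setOfWords2Vec(vocabList, inputSet):
    """
    Takes the vocabulary list and one document and returns the document
    vector. Each element is 1 or 0, indicating whether the corresponding
    vocabulary word appears in the input document.
    """
    returnVec = [0] * len(vocabList)
    for word in inputSet:
        if word in vocabList:
            returnVec[vocabList.index(word)] = 1
    return returnVec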

def createVocabList(dataSet):
    """
    Create a list of the distinct words that appear across all defects.
    """
    vocabSet = set()
    for document in dataSet:
        vocabSet = vocabSet | set(document)
    return list(vocabSet)

def classify0(inX, dataSet, labels, k):
    """
    k-nearest-neighbors classifier: returns the majority label among the
    k rows of dataSet closest to inX (Euclidean distance).
    """
    dataSetSize = dataSet.shape[0]
    # Euclidean distance from inX to every training row.
    diffMat = np.tile(inX, (dataSetSize, 1)) - dataSet
    sqDiffMat = diffMat**2
    sqDistances = sqDiffMat.sum(axis=1)
    distances = sqDistances**0.5
    sortedDistIndicies = distances.argsort()
    # Count the labels of the k nearest rows.
    classCount = {}
    for i in range(k):
        voteIlabel = labels[sortedDistIndicies[i]]
        classCount[voteIlabel] = classCount.get(voteIlabel, 0) + 1
    print(classCount)
    sortedClassCount = sorted(classCount.items(), key=operator.itemgetter(1), reverse=True)
    return sortedClassCount[0][0]

def testingKNN(defect_in):
    """
    Classify one defect description with kNN (k=5), caching the vocabulary
    list, class vector and training matrix as .npy files.
    """
    defect_list = None
    if os.path.isfile(VOCABLIST_FILE) and os.path.isfile(CLASSVEC_FILE):
        myVocabList = np.load(VOCABLIST_FILE)
        myVocabList = myVocabList.tolist()
        class_vec = np.load(CLASSVEC_FILE)
    else:
        defect_list, class_vec = loadDataSet()
        myVocabList = createVocabList(defect_list)
        np.save(VOCABLIST_FILE, myVocabList)
        np.save(CLASSVEC_FILE, class_vec)
    if os.path.isfile(TRAINMAT_FILE):
        trainMat = np.load(TRAINMAT_FILE)
    else:
        if defect_list is None:
            # The vocabulary was cached but the matrix was not: reload the samples.
            defect_list, _ = loadDataSet()
        trainMat = []
        for defect in defect_list:
            trainMat.append(setOfWords2Vec(myVocabList, defect))
        np.save(TRAINMAT_FILE, trainMat)
    thisDefect = np.array(setOfWords2Vec(myVocabList, seg_sentence(defect_in)))
    clause = classify0(thisDefect, np.array(trainMat), np.array(class_vec), 5)
    return clause

if __name__ == "__main__":
    if len(sys.argv) <= 1:
        print("No defect description was provided.")
        sys.exit(1)
    sentence = sys.argv[1]
    clause = testingKNN(sentence)
    print('The clause most likely to match this defect is:', clause)

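The core of the listing, set-of-words vectors followed by a k-nearest-neighbors vote, can be tried without jieba or the sample CSV. The following is a minimal sketch under stated assumptions, not the script itself: the helper names `build_vocab`, `to_vector` and `knn_classify`, the English tokens and the labels are all hypothetical, and it uses only the standard library (Python 3.8+ for `math.dist`) instead of NumPy.

```python
from collections import Counter
import math


def build_vocab(documents):
    """Return a sorted list of the distinct tokens across all documents."""
    return sorted({token for doc in documents for token in doc})


def to_vector(vocab, tokens):
    """Set-of-words vector: 1 if the vocabulary word occurs in tokens, else 0."""
    present = set(tokens)
    return [1 if word in present else 0 for word in vocab]


def knn_classify(query, vectors, labels, k):
    """Majority vote among the k training vectors closest to query (Euclidean)."""
    distances = [math.dist(query, vec) for vec in vectors]
    nearest = sorted(range(len(vectors)), key=distances.__getitem__)[:k]
    votes = Counter(labels[i] for i in nearest)
    return votes.most_common(1)[0][0]


# Hypothetical, already-tokenized samples; the labels stand in for clause numbers.
samples = [
    ["pump", "leak", "seal"],
    ["pump", "leak", "oil"],
    ["valve", "label", "missing"],
    ["label", "missing", "cabinet"],
]
labels = [1, 1, 2, 2]

vocab = build_vocab(samples)
train = [to_vector(vocab, doc) for doc in samples]

# Words outside the vocabulary ("unknown") are simply ignored.
query = to_vector(vocab, ["seal", "leak", "unknown"])
predicted = knn_classify(query, train, labels, k=3)
print(predicted)
```

Sorting the vocabulary here keeps the column order stable between runs, which matters when vectors are cached to disk and reused later.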